This is a continuation of my notes on MPI. Part one of MPI tutorial notes is here. The sources and references are listed at the end of the article.

Communication

Point to point communication: Communications where one process sends a message to a receiver process with the rank of the process and a tag (identifier), for the receiver to process it accordingly. The crucial components are one sender and one receiver.

Collective communication: The scenario when a process needs to pass a message to multiple other processes. For example, when a master process wants to broadcast a message to its worker processes, it is referred to as a collective communication.

A combination of Point to point and collective communication can further optimise and speed up the calculations via MPI.

Point to point communication routine

There are different types of send and receive routines used for different purposes. For example:

- Synchronous send

- Blocking send / blocking receive

- Non-blocking send / non-blocking receive

- Buffered send

- Combined send/receive

- “Ready” send

Blocking vs Non-blocking

Most of the MPI routines can in be in Blocking or non-blocking mode.

Blocking send : Sending process is blocked until the received by the receiving process or mailbox.

Non-blocking send : Message is sent by the sending process and resumes operation. (asynchronous type)

Blocking receive : The receiver blocks until a message is available.

Non-blocking receive : The receiver retrieves a valid message or null. The receiver process is not blocked and receives a message if it comes upon. (asynchronous type)

Point to point communication routine arguments

| Blocking sends |

MPI_Send(buffer,count,type,dest,tag,comm) |

| Non-blocking sends |

MPI_Isend(buffer,count,type,dest,tag,comm,request) |

| Blocking receive |

MPI_Recv(buffer,count,type,source,tag,comm,status) |

| Non-blocking receive |

MPI_Irecv(buffer,count,type,source,tag,comm,request) |

Buffer: Program (application) address space that references the data that is to be sent or received. In most cases, this is simply the variable name that is be sent/received. For C programs, this argument is passed by reference and usually must be prepended with an ampersand: &var1

System buffer holds the data which has been sent by the send process and hasn’t yet been received by the receive process. There are three kinds of queues in system buffers:

1. Zero capacity : The buffer has zero capacity and hence the link/ queue cannot hold any message in it.

2. Finite capacity : The link is of finite length ‘n’. If the link is not filled completely, it will keep the message from the sender and the sending process will continue execution.

3. Unbounded capacity : The link is of infinite length. It can hold infinitely many messages.

Data Count: Indicates the number of data elements of a particular type to be sent.

Data Type: For reasons of portability, MPI predefines its elementary data types.

- Source: Specified as the rank of the sending process for receiver routine to identify.

- Tag: Arbitrary non-negative integer assigned by the programmer to uniquely identify a message. Send and receive operations should match message tags.

- Communicator: Indicates the communication context, or set of processes for which the source or destination fields are valid. Unless the programmer is explicitly creating new communicators, the predefined communicator MPI_COMM_WORLD is usually used.

- Status: For a receive operation, indicates the source of the message and the tag of the message. In C, this argument is a pointer to a predefined structure MPI_Status (ex. stat.MPI_SOURCE stat.MPI_TAG).

- Request: Used by non-blocking send and receive operations. Since non-blocking operations may return before the requested system buffer space is obtained, the system issues a unique “request number”. The programmer uses this system assigned “handle” later (in a WAIT type routine) to determine completion of the non-blocking operation.

Blocking communication routines

Example 2:

#include "mpi.h"

#include <stdio.h>

main(int argc, char *argv[]) {

int numtasks, rank, dest, source, rc, count, tag=1;

char inmsg, outmsg='x';

MPI_Status Stat; // required variable for receive routines

MPI_Init(&argc,&argv);

MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

// task 0 sends to task 1 and waits to receive a return message

if (rank == 0) {

dest = 1;

source = 1;

MPI_Send(&outmsg, 1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);

MPI_Recv(&inmsg, 1, MPI_CHAR, source, tag, MPI_COMM_WORLD, &Stat);

}

// task 1 waits for task 0 message then returns a message

else if (rank == 1) {

dest = 0;

source = 0;

MPI_Recv(&inmsg, 1, MPI_CHAR, source, tag, MPI_COMM_WORLD, &Stat);

MPI_Send(&outmsg, 1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);

}

// query receive Stat variable and print message details

MPI_Get_count(&Stat, MPI_CHAR, &count);

printf("Task %d: Received %d char(s) from task %d with tag %d \n",

rank, count, Stat.MPI_SOURCE, Stat.MPI_TAG);

MPI_Finalize();

}

|

Gives the output for

“srun -p SHARED -N2 -n2 –mpi=pmi2 ./blockingsend”

Task 1: Received 1 char(s) from task 0 with tag 1

Task 0: Received 1 char(s) from task 1 with tag 1

“srun -p SHARED -N2 -n3 –mpi=pmi2 ./blockingsend”

Task 2: Received 0 char(s) from task 4196211 with tag 0

Task 0: Received 1 char(s) from task 1 with tag 1

Task 1: Received 1 char(s) from task 0 with tag 1

Exercise 2

- Have each task determine a unique partner task to send/receive with. One easy way to do this:

C:

if (taskid < numtasks/2)

then partner = numtasks/2 + taskid

else if (taskid >= numtasks/2)

then partner = taskid - numtasks/2

|

- Each task sends its partner a single integer message: its taskid

- Each task receives from its partner a single integer message: the partner’s taskid

- For confirmation, after the send/receive, each task prints something like “Task ## is partner with ##” where ## is the taskid of the task and its partner.

#include <mpi.h>

#include <stdio.h>

#include <math.h>

int main(int argc, char** argv) {

// Initialize the MPI environment

MPI_Init(&argc, &argv);

// Get the number of processes

int world_size, partner, source, tag=1;

MPI_Status Stat;

MPI_Comm_size(MPI_COMM_WORLD, &world_size);

// Get the rank of the process

int world_rank, rec_rank;

MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

if (world_rank < world_size/2)

{

partner = world_size/2+world_rank;

source = partner;

MPI_Send(&world_rank, 1, MPI_INT, partner, tag, MPI_COMM_WORLD);

MPI_Recv(&rec_rank, 1, MPI_INT, source, tag, MPI_COMM_WORLD, &Stat);

}

else

{

partner = world_rank-world_size/2;

source = partner;

MPI_Send(&world_rank, 1, MPI_INT, partner, tag, MPI_COMM_WORLD);

MPI_Recv(&rec_rank, 1, MPI_INT, source, tag, MPI_COMM_WORLD, &Stat);

}

printf(“Task id %d is partner with %d \n”,world_rank,rec_rank);

// Finalize the MPI environment.

MPI_Finalize();

}

Output:

srun -p SHARED -n10 -N2 –mpi=pmi2 ./helloBsend

Task id 0 is partner with 5

Task id 5 is partner with 0

Task id 1 is partner with 6

Task id 3 is partner with 8

Task id 4 is partner with 9

Task id 2 is partner with 7

Task id 6 is partner with 1

Task id 7 is partner with 2

Task id 8 is partner with 3

Task id 9 is partner with 4

Non Blocking communication routines

- MPI_Isend: Identifies an area in memory to serve as a send buffer. Processing continues immediately without waiting for the message to be copied out from the application buffer. A communication request handle is returned for handling the pending message status. The program should not modify the application buffer until subsequent calls to MPI_Wait or MPI_Test indicate that the non-blocking send has completed.

- MPI_Irecv

Example 3:

#include <mpi.h>

#include <stdio.h>

#include <math.h>

int main(int argc, char** argv) {

// Initialize the MPI environment

MPI_Init(&argc, &argv);

// Get the number of processes

int numtasks, taskid, partner, tag=1;

MPI_Status stats[2];

MPI_Request reqs[2];

MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

// Get the rank of the process

int message;

MPI_Comm_rank(MPI_COMM_WORLD, &taskid);

if (taskid < numtasks/2)

partner = numtasks/2 + taskid;

else if (taskid >= numtasks/2)

partner = taskid – numtasks/2;

MPI_Irecv(&message, 1, MPI_INT, partner, 1, MPI_COMM_WORLD, &reqs[0]);

MPI_Isend(&taskid, 1, MPI_INT, partner, 1, MPI_COMM_WORLD, &reqs[1]);

/* now block until requests are complete */

MPI_Waitall(2, reqs, stats);

/* print partner info and exit*/

printf(“Task %d is partner with %d\n”,taskid,message);

// Finalize the MPI environment.

MPI_Finalize();

}

srun -p SHARED -n10 -N2 –mpi=pmi2 ./helloNBsend

Task 0 is partner with 5

Task 5 is partner with 0

Task 1 is partner with 6

Task 6 is partner with 1

Task 3 is partner with 8

Task 8 is partner with 3

Task 7 is partner with 2

Task 2 is partner with 7

Task 4 is partner with 9

Task 9 is partner with 4

Collective communication routine

Collective action over a communicator. Collective communication routines must involve all processes within the scope of a communicator and are blocking. No tags are required.

Types of collective communication

- Synchronisation: Processes wait until everyone has reached a synchronisation point. eg. MPI_Barrier

- Data movement: Broadcast/ scatter/gather, all to all.

- Collective computation (reduction): Performing an operation after data is collected by one process. eg. Global sum/ Global maximum or minimum.

The scope is all the processes in the communicator.

Collective Communication Routines

MPI_Barrier :

Synchronization operation. Creates a barrier synchronization in a group. Each task, when reaching the MPI_Barrier call, blocks until all tasks in the group reach the same MPI_Barrier call. Then all tasks are free to proceed.

For example: Process 1 calls MPI_Barrier, from then onwards it will wait until every other processor in the communicator calls MPI_Barrier.

MPI_Bcast :

-

-

Data movement operation. Broadcasts (sends) a message from the process with rank “root” to all other processes in the group.

One process broadcasts a message to all the processes in the communicator. Note: Not only the root process posts this. Every process that needs to receive data has to send this as well.

MPI_Scatter :

-

Data movement operation. Distributes distinct messages from a single source task to each task in the group.

-

-

sendcnt: Number of elements sent by one process.

-

rcvcnt: Number of elements of rcv data type to be received by one process.

MPI_Gather: Opposite of MPI Scatter.

Collective computation/ Reduction operators: MPI_Sum etc.

| MPI Reduction Operation |

C Data Types |

Fortran Data Type |

| MPI_MAX |

maximum |

integer, float |

integer, real, complex |

| MPI_MIN |

minimum |

integer, float |

integer, real, complex |

| MPI_SUM |

sum |

integer, float |

integer, real, complex |

| MPI_PROD |

product |

integer, float |

integer, real, complex |

| MPI_LAND |

logical AND |

integer |

logical |

| MPI_BAND |

bit-wise AND |

integer, MPI_BYTE |

integer, MPI_BYTE |

| MPI_LOR |

logical OR |

integer |

logical |

| MPI_BOR |

bit-wise OR |

integer, MPI_BYTE |

integer, MPI_BYTE |

| MPI_LXOR |

logical XOR |

integer |

logical |

| MPI_BXOR |

bit-wise XOR |

integer, MPI_BYTE |

integer, MPI_BYTE |

| MPI_MAXLOC |

max value and location |

float, double and long double |

real, complex,double precision |

| MPI_MINLOC |

min value and location |

float, double and long double |

real, complex, double precision |

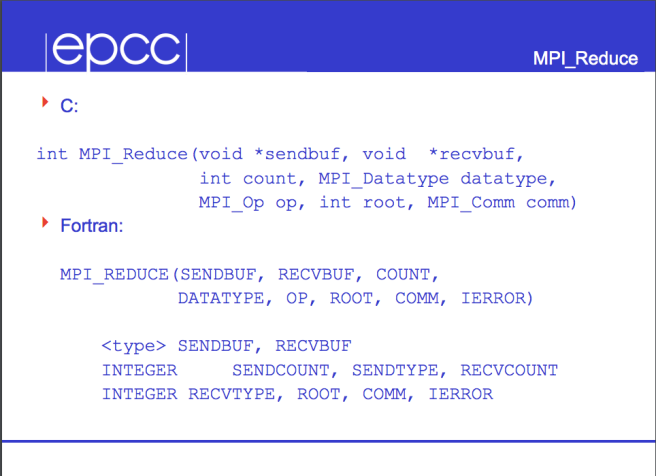

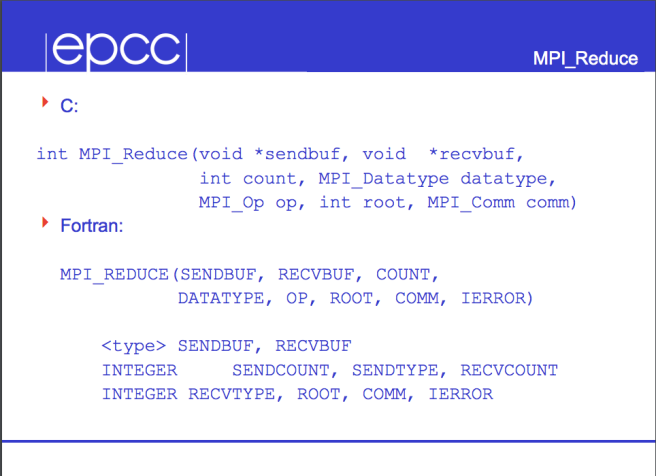

MPI_Reduce

-

Collective computation operation. Applies a reduction operation on all tasks in the group and places the result in one task.

| MPI_Reduce (&sendbuf,&recvbuf,count,datatype,op,root,comm) |

MPI_Allreduce

Collective computation operation + data movement. Applies a reduction operation and places the result in all tasks in the group. This is equivalent to an MPI_Reduce followed by an MPI_Bcast.

Example 3

#include "mpi.h"

#include <stdio.h>

#define SIZE 4

main(int argc, char *argv[]) {

int numtasks, rank, sendcount, recvcount, source;

float sendbuf[SIZE][SIZE] = {

{1.0, 2.0, 3.0, 4.0},

{5.0, 6.0, 7.0, 8.0},

{9.0, 10.0, 11.0, 12.0},

{13.0, 14.0, 15.0, 16.0} };

float recvbuf[SIZE];

MPI_Init(&argc,&argv);

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

MPI_Comm_size(MPI_COMM_WORLD, &numtasks);

if (numtasks == SIZE) {

// define source task and elements to send/receive, then perform collective scatter

source = 1;

sendcount = SIZE;

recvcount = SIZE;

MPI_Scatter(sendbuf,sendcount,MPI_FLOAT,recvbuf,recvcount,

MPI_FLOAT,source,MPI_COMM_WORLD);

printf("rank= %d Results: %f %f %f %f\n",rank,recvbuf[0],

recvbuf[1],recvbuf[2],recvbuf[3]);

}

else

printf("Must specify %d processors. Terminating.\n",SIZE);

MPI_Finalize();

}

rank= 0 Results: 1.000000 2.000000 3.000000 4.000000

rank= 1 Results: 5.000000 6.000000 7.000000 8.000000

rank= 2 Results: 9.000000 10.000000 11.000000 12.000000

rank= 3 Results: 13.000000 14.000000 15.000000 16.000000

Next part of MPI notes is here.

Continue reading “MPI tutorials (II)” →